Backup and Storage Solutions

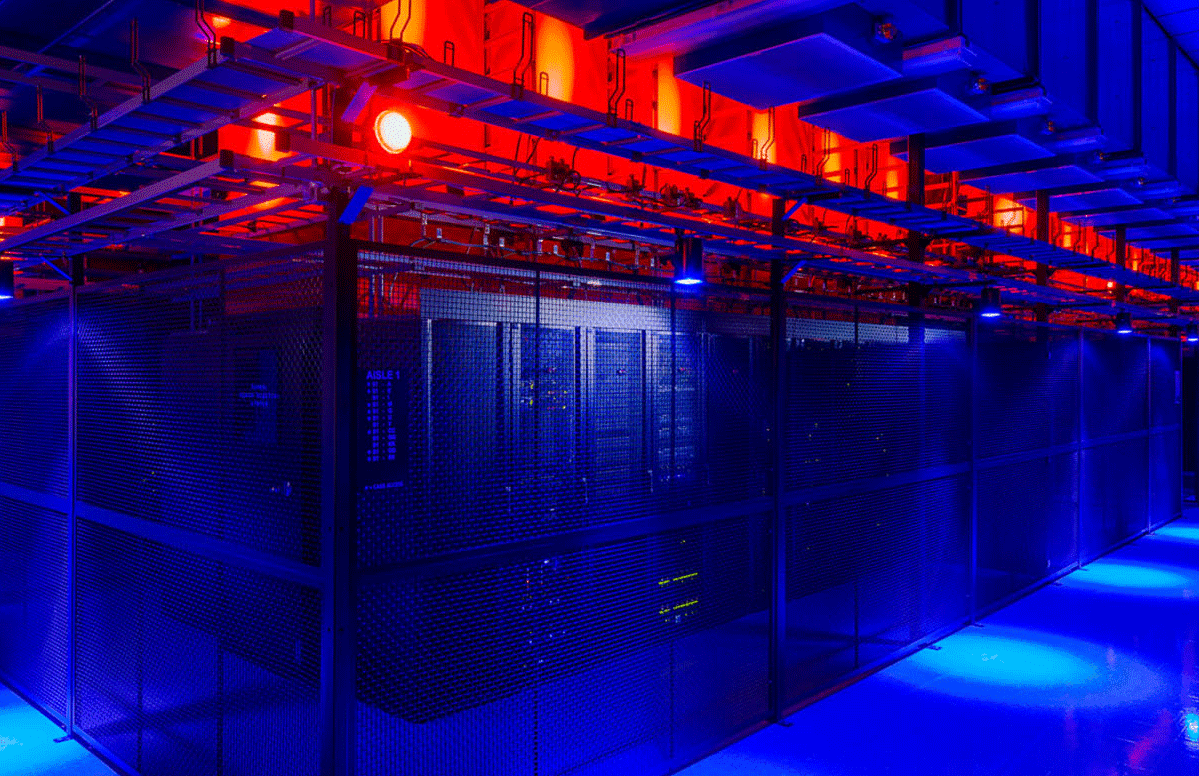

XIOLOGIX BACKUP AS A SERVICE (BaaS)

Elevate Your Data Security

Backup as a service (BaaS) is an approach to data protection in which an organization purchases backup and recovery services from an online data backup provider such as Xiologix. Instead of performing backups through a centralized, on-premises IT department, BaaS connects systems to a private, public or hybrid cloud managed by the outside provider. This makes BaaS easier to manage than traditional offsite backup: rather than rotating and managing tapes or hard disks at an offsite location, data storage administrators can offload maintenance and management to the provider.

With Veeam Cloud Connect, virtual or physical workloads are sent to a hosted cloud repository offered by Xiologix over a secure SSL/TLS connection. All of this is achieved without the cost and complexity of building and maintaining off-site infrastructure, and with no additional Veeam licensing required.

The vendors listed below represent only a sampling of our partnerships. If you are looking for a solution not listed, please inquire.

Stay Informed with the Latest Insights

Explore our collection of blog posts tailored to keep you ahead in the ever-evolving world of technology. From industry trends to practical tips and expert advice, our articles are designed to empower your business with knowledge and innovative solutions.

If You’re Not Leveraging the Cloud for Backup and DR – Why Not?

According to recent data, 64% of small & medium-sized businesses are already using some kind of cloud-based software. 88% of SMBs consume at least one cloud service, and 78% report that they are considering purchasing new cloud solutions in the next year or two....

Veeam and Cloud Replication – Better Together

If you’re using Veeam to back up your virtualized server environment, congratulations! You’re using one of the best products on the market for enabling the “always on” enterprise. But what are you doing for disaster recovery? Do you have an...